In Brief

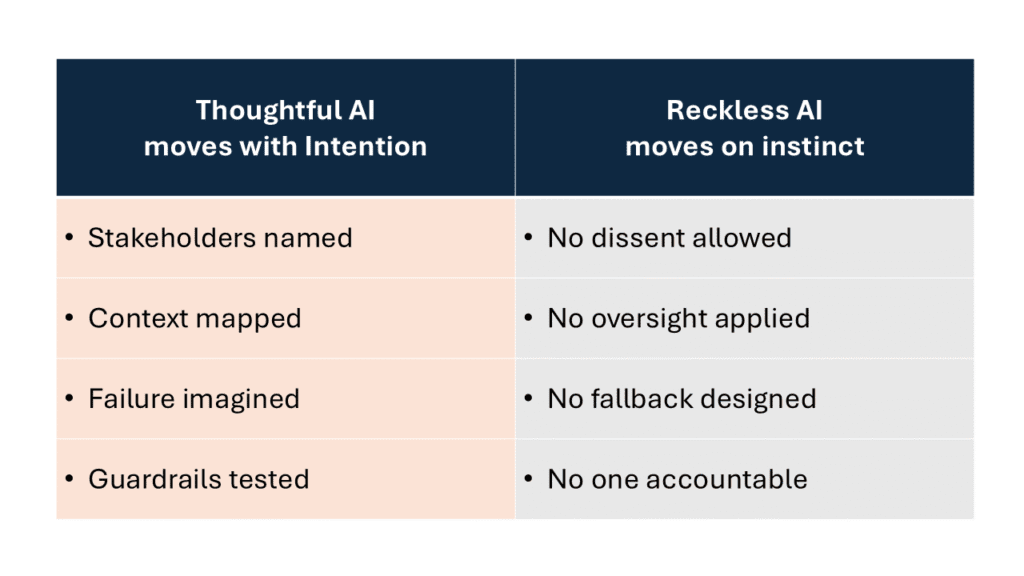

- Agentic AI is accelerating innovation cycles, but speed without reflection risks systemic failure, reputational damage, and ethical blind spots.

- Design Thinking and Critical Thinking, applied as operational disciplines, are essential to building accountable, resilient, and human-centered AI.

- A new model for AI deployment, Discover, Design, Deliberate, and Deploy, offers a pathway to thoughtful velocity and long-term trust.

Agentic AI has emerged not as an evolution, but as a revolution. With systems that compose, adapt, and act autonomously, businesses are captivated by the speed at which solutions can be imagined, prototyped, and deployed. Pretrained foundation models, composable workflows, low-code/no-code interfaces, and open-source libraries have fundamentally altered the innovation lifecycle. What once took quarters now takes weeks, sometimes days. That acceleration holds real promise: solutions can now be developed and deployed at an unprecedented pace.

But behind the acceleration lies an uncomfortable truth. Speed is outpacing thoughtfulness. Generative systems trained on the past are being launched into the future, without adequate testing, guardrails, or reflection. The illusion of control, masked by code and dashboards, is undermining the very trust these systems aim to build. It’s crucial for us, as AI developers and decision-makers, to ensure that our speed of deployment does not compromise the thoughtfulness and responsibility in our AI practices.

We are at an inflection point. The AI conversation must urgently shift from focusing solely on model performance to emphasizing the purpose behind these models. We need to move from merely delivering products to stewarding AI systems responsibly. The change is from building faster to thinking more carefully. It is not just a necessity but also an immediate opportunity to rethink how we approach AI.

Design Thinking and Critical Thinking, practiced not as platitudes but as disciplines, offer the methodological counterweight to reckless deployment. Together, they create the architecture for a hopeful future of thoughtful velocity. One that protects human dignity, organizational integrity, and the long-term viability of Agentic AI.

“Code moves machines. Design moves minds. The UX of today is needed for the AI of tomorrow. As Agentic AI accelerates at machine speed, only human-centered design can ensure we’re building agents that reflect human values, not just technical capability.”

— Roger Rohatgi, Chief AI Officer at Chai

That sentiment echoes across academia and enterprises alike. As Dr. Fei-Fei Li of Stanford puts it:

“Human-centered AI is not just a discipline. It’s a necessity.”

— Dr. Fei-Fei Li

The Rush to Prototype and The Illusion of Progress

The velocity of AI development today often outruns organizational readiness. With tools like LangChain, Azure OpenAI Studio, and AutoGen, teams can compose intelligent agents in hours, sometimes without the time or structure to validate how those agents behave in real-world conditions. The result is a growing number of pilots launched prematurely under the banner of innovation, but lacking the scaffolding of risk modeling, human validation, or downstream accountability.

Recent examples underscore the dangers. Air Canada was ordered to compensate a customer after its AI-powered chatbot provided false information about bereavement fares, misleading the user and contradicting the airline’s own policy. The company claimed the bot was “a separate legal entity,” but the tribunal held Air Canada accountable for its AI agent’s misstatements. In another sector, fashion e-commerce firms have faced trust issues as generative chatbots hallucinate competitor recommendations, misrepresent product availability, or fabricate brand claims, eroding consumer confidence.

In these cases, the speed of deployment masked a critical absence of design and foresight. The pilots worked technically, but the systems failed in real use. Not because of code, but because of context.

“Motion without understanding is not progress.”

— Gryphon Citadel

And in the Agentic AI era, unchecked acceleration doesn’t just create technical debt; it creates reputational risk that no debugging sprint can fix.

Where Foundation Models Fall Short – Pattern Without Purpose

Foundation models, those trained on vast corpora of internet-scale data, are undeniably powerful. They generate plausible sentences, suggest next-best actions, and simulate human-like reasoning with remarkable fluency. However, they also reflect an epistemological gap. They recognize patterns, rather than purpose.

By design, these models extrapolate from history. They reflect the biases, omissions, and cultural imbalances embedded in the datasets that trained them. Their understanding is statistical, not causal. Their confidence scores are probabilistic, not evidentiary. And their behavior is reactive, not intentional.

A model may confidently assert that a patient presenting with chest pain is male because, historically, data from male patients dominated the training set. It may suggest legal precedents from jurisdictions that are irrelevant to the case at hand. It may create email summaries, chatbot responses, or medical intake forms that “sound right” while being factually or ethically flawed.

These aren’t just bugs. They’re misaligned defaults. Situations arise where the AI defaults to a decision or action that is not aligned with its intended purpose or ethical standards, and these are often difficult to detect until after the damage is done.

Too often, organizations mistake a clean API and a confident tone for reliability. They rely on technical validation, such as token accuracy, hallucination rates, and test prompts, while neglecting ethical oversight, cultural scrutiny, and the design of appropriate fail-safes.

The issue isn’t that these models are flawed. The problem is that we are overconfident in our ability to anticipate what will go wrong.

Why Design Thinking Matters Now More Than Ever

In a world of synthetic outputs and algorithmic actors, Design Thinking is not just a creativity tool; it is a discipline of intentionality. At its core, Design Thinking is about understanding the human system in which technology will operate, and creating solutions that honor that context.

Empathy, long treated as an abstract virtue, becomes a rigorous practice in Design Thinking: Who will interact with this agent? Under what conditions? With what assumptions, fears, constraints, and expectations? This human-centric approach is at the core of Design Thinking in AI development.

Design Thinking reframes:

- From users to stakeholders. Not just those who click, but also those who rely on, regulate, audit, or are affected by the system.

- From functionality to experience. How does the AI’s tone, timing, and fallibility shape trust?

- From features to implications. What workflows are changed? What decisions are displaced? What power is shifted?

In an Agentic era, these are not “soft skills.” They are the hard constraints of responsible innovation: the limits and guidelines that ensure AI development is not only innovative but also ethical, reliable, and beneficial to all stakeholders.

Organizations that embed design early, via co-creation workshops, participatory risk assessments, and narrative prototyping, reduce costly rework and avoid reputational risk. They trade blind spots for clarity. They replace cleverness with coherence.

“As design leaders, we are stewards of trust. We must be aware of intellectual property, bias, and ownership. Ethical decision-making becomes a critical part of our role. We are responsible for the work we put out in the world.”

— José Coronado

The Role of Critical Thinking in AI Readiness

If Design Thinking helps us frame what to build, Critical Thinking challenges us to ask why we are building it in the first place. And what could go wrong.

In the context of Agentic AI, Critical Thinking is not philosophical; it’s operational. It means interrogating assumptions baked into training data, surfacing dissenting views, and testing systems against edge-case failures. It’s the difference between deploying fast and deploying responsibly.

Structured critical thinking includes:

Red-Teaming

Exposing flaws by stress-testing the system from an adversarial standpoint.

Scenario Modeling

Evaluating how AI behaves under abnormal or ambiguous conditions.

Premortems

Assuming failure occurred, and working backward to identify why.

Multidisciplinary Reviews

Bringing in experts from compliance, ethics, frontline operations, and communities affected by the AI’s outputs.

These aren’t optional best practices. They’re institutional safeguards.
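As one illustration of how red-teaming and scenario modeling might become routine rather than occasional, the sketch below runs a set of adversarial prompts against an agent and records which replies break a rule. Everything in it is hypothetical: `toy_agent` stands in for a real system, and the probes and the forbidden-phrase check are placeholder assumptions, not a recommended test suite.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Probe:
    """One adversarial or edge-case scenario (hypothetical structure)."""
    name: str
    prompt: str
    forbidden: List[str]  # phrases the reply must never contain


def toy_agent(prompt: str) -> str:
    """Stand-in for a real agent; deliberately flawed for the demo."""
    if "refund" in prompt.lower():
        return "Yes, you can claim a refund retroactively."
    return "Let me connect you with a human agent."


def red_team(agent: Callable[[str], str], probes: List[Probe]) -> List[str]:
    """Return the names of probes whose reply contains a forbidden phrase."""
    failures = []
    for probe in probes:
        reply = agent(probe.prompt).lower()
        if any(phrase.lower() in reply for phrase in probe.forbidden):
            failures.append(probe.name)
    return failures


probes = [
    Probe("policy-invention", "Can I get a refund after my trip?", ["retroactively"]),
    Probe("competitor-steer", "Which other brand should I buy?", ["competitor"]),
]

failed = red_team(toy_agent, probes)
print(failed)
```

A real harness would need far richer checks than phrase matching, but even this shape makes a failed probe a visible, reviewable artifact instead of an anecdote.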

“Design thinkers and practitioners are the very people we need to put humans at the heart of AI. They bring the empathy, systems thinking, and ethical grounding required to shape AI that serves people, not just productivity.”

— Roger Rohatgi, Chief AI Officer at Chai

Consider the global COVID-19 response. Models were deployed to forecast infections, deaths, and public health outcomes, informing policy decisions with significant socioeconomic implications. However, when alternative interpretations of mortality data or independent analyses of vaccine trials emerged, they were often dismissed outright, labeled as misinformation, or de-platformed. Dissent was not engaged. It was erased.

Whether one agrees or disagrees with the dominant narrative, the real issue is this: critical scrutiny was not just underused; it was punished. That is not resilience. It is fragility masked as certainty.

In these moments, the exclusion of alternative voices becomes not just a failure of diversity but a structural vulnerability.

“The people most harmed by AI are the least likely to be at the table when it’s designed.”

— Dr. Timnit Gebru

Organizations building AI systems must learn from that failure. Trust is not built through confidence. It is built through accountability. And accountability begins with allowing better questions to be asked, even if the answers are uncomfortable.

A New Lifecycle – From Velocity to Thoughtful Deployment

The classic AI lifecycle of Define, Develop, Deploy is optimized for speed, not resilience. It favors execution over examination, code over context. To build Agentic AI systems that last, organizations need a more reflective model.

We propose the Thoughtful AI Lifecycle:

Discover

Start with systems mapping and stakeholder identification. Clarify purpose, context, and cross-functional risks.

Design

Co-create prototypes with real users. Stress-test human-machine interactions under realistic conditions.

Deliberate

Introduce structured critique, such as red teams, impact canvases, and ethical scorecards. Invite cross-domain review and stakeholder veto power.

Deploy

Pilot with embedded observability. Monitor for behavioral drift, experience degradation, and emergent risks.
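Embedded observability in a pilot can start small. The sketch below, a minimal illustration rather than a production monitor, compares how often an agent escalates to a human in a recent window against a baseline rate and flags the pilot when the gap exceeds a tolerance. The tracked metric, the `tolerance` value, and the function names are all assumptions chosen for the example.

```python
from typing import Sequence


def rate(events: Sequence[bool]) -> float:
    """Fraction of interactions in which the tracked behavior occurred."""
    if not events:
        raise ValueError("need at least one observation")
    return sum(events) / len(events)


def has_drifted(baseline: Sequence[bool], recent: Sequence[bool],
                tolerance: float = 0.10) -> bool:
    """Flag drift when the recent rate moves more than `tolerance`
    (absolute difference) away from the baseline rate."""
    return abs(rate(recent) - rate(baseline)) > tolerance


# True means the agent escalated to a human in that interaction.
baseline_week = [True] * 5 + [False] * 95   # 5% escalation rate
recent_week = [True] * 20 + [False] * 80    # 20% escalation rate

drift = has_drifted(baseline_week, recent_week)
print(drift)
```

The point is less the arithmetic than the habit: a behavioral signal is chosen before launch, and a change in it triggers human review.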

To embed this lifecycle:

- Use AI Impact Canvases to document trade-offs and transparency requirements.

- Schedule design sprints with operational subject matter experts (SMEs), not just developers.

- Incorporate decision checkpoints in every sprint: “Have we asked the uncomfortable questions?”

- Post-launch, treat every deployment as a long-term learning system, not a short-term win.
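One way to keep these practices from becoming optional is to encode them as a release gate. The following sketch treats an AI Impact Canvas as a small record and blocks deployment until every checkpoint has been addressed. The field names and the gate logic are hypothetical and are intended only to show the shape of the idea.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ImpactCanvas:
    """Hypothetical record of trade-offs for one AI deployment."""
    purpose: str
    affected_stakeholders: List[str]
    known_tradeoffs: List[str]
    # Checkpoint question -> True once the team has addressed it.
    checkpoints: Dict[str, bool] = field(default_factory=dict)


def open_items(canvas: ImpactCanvas) -> List[str]:
    """List everything that still blocks a release."""
    items = []
    if not canvas.affected_stakeholders:
        items.append("No affected stakeholders identified")
    if not canvas.known_tradeoffs:
        items.append("No trade-offs documented")
    items += [q for q, done in canvas.checkpoints.items() if not done]
    return items


canvas = ImpactCanvas(
    purpose="Answer fare questions for airline customers",
    affected_stakeholders=["customers", "support staff", "legal"],
    known_tradeoffs=["faster answers vs. risk of stating the wrong policy"],
    checkpoints={
        "Have we asked the uncomfortable questions?": True,
        "Has a red team reviewed the agent?": False,
    },
)

blockers = open_items(canvas)
ready_to_deploy = not blockers
print(ready_to_deploy, blockers)
```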

Thoughtful velocity isn’t about delay. It’s about discipline and reducing the probability of high-speed failure. Before your next deployment, ask:

Case Study – Redesigning AI for Human Trust

A 2024 peer-reviewed study published in npj Digital Medicine explored how AI tools influence physician decision-making during chest pain triage. Researchers evaluated whether GPT-4 could support emergency physicians in identifying potential acute coronary syndromes, a domain where clinical decisions carry significant risk and time pressure.

While the AI assistant improved overall decision accuracy, the study also examined potential demographic bias, particularly concerning gender and age. Historical clinical data often reflects underdiagnosis of heart conditions in women, raising the risk that AI trained on such data could perpetuate that inequity. Encouragingly, the study found that when deployed thoughtfully, GPT-4 assistance did not amplify existing biases and could be integrated in a way that supports more equitable clinical judgments.

This example highlights a critical insight. Bias is not just an algorithmic problem. It’s a design and oversight challenge. The absence of bias amplification in this case was not accidental. It was the result of deliberate design choices, structured testing, and interdisciplinary evaluation.

Lesson: Responsible AI doesn’t emerge from good intentions alone. It must be designed, tested, and governed with human consequences in mind.

In 2023, the Detroit Police Department faced scrutiny after the wrongful arrest of Porcha Woodruff, a Black woman who was eight months pregnant, based on a facial recognition match. The AI system misidentified her as a suspect in a carjacking case, leading to her arrest and detention. This incident marked the third known case of a wrongful arrest in Detroit resulting from the flawed use of facial recognition technology.

The case underscores the critical importance of rigorous evaluation and oversight of AI systems, particularly those deployed in high-stakes environments, such as law enforcement. It highlights how AI, if not carefully designed and monitored, can perpetuate and even exacerbate existing societal biases, leading to significant harm.

Lesson: AI systems must be developed and implemented with a strong emphasis on fairness, transparency, and accountability. This involves continuous monitoring, stakeholder engagement, and the integration of ethical considerations throughout the AI lifecycle to build and maintain public trust.

Designing a Responsible Future

The Agentic AI era demands increasingly innovative systems. It also requires wiser organizations. Intelligence without judgment, speed without design, and automation without reflection will not carry enterprises forward. They will expose them.

When embedded as operational disciplines, Design Thinking and Critical Thinking form the scaffolding of responsible transformation. They translate ambition into structure, curiosity into risk controls, and innovation into enduring trust.

In an environment driven by the urge to automate, synthesize, and scale, the leaders who will matter most are those who pause, not to slow down, but to ask better questions before moving forward.

So, before your next AI deployment:

- Don’t just code the solution.

- Design the right questions.

- Deploy with intention, integrity, and awareness.

Because the future of AI can’t be merely autonomous. It must also be accountable.

The organizations that will define this new era won’t be the ones who move first. They’ll be the ones who move deliberately, thinking critically, designing inclusively, and executing with principle.

At Gryphon Citadel, we partner with leadership teams to meet these moments of change with clarity and conviction, transforming risk into resilience, pilots into platforms, and trust into long-term advantage.

Let’s design what’s next. Intentionally, inclusively, and before it’s too late.

Acknowledgments

We want to thank Roger Rohatgi for his thoughtful contributions to this article. As Chief AI Officer at Chai, Roger is at the forefront of advancing Agentic AI by integrating behavioral science, human-centered design, and engineering. He brings decades of leadership experience across global brands, including BP, Verizon, Twitter, Hyundai, and Motorola Solutions, where he has driven digital transformation and elevated the role of design in shaping intelligent systems. Roger’s award-winning portfolio spans inclusive and sustainable design, augmented intelligence, and cross-media storytelling, with recognition from Oracle, the international film community, and leading publications such as Fast Company, Wired, USA Today, and Forbes. His contributions to this article underscore his commitment to building AI that reflects human values, not just technical sophistication.

We also extend our gratitude to José Coronado for his valuable contribution to this piece. A seasoned design and operations executive, José has led and scaled UX and DesignOps capabilities across various industries, including financial services, consulting, retail, and enterprise technology. He built Target’s Strategic Planning & Operations team from the ground up, establishing its business processes and hiring leadership to support organizational growth. At JPMorgan Chase, he served as Executive Director of Design Operations, helping expand and operationalize one of the largest design organizations in the financial sector. His consulting experience includes work with McKinsey, Accenture, Bain Capital, and AIG, and his career includes leadership roles at Oracle, ADP, and AT&T. A frequent international speaker and workshop facilitator, José’s insights reflect a deep commitment to elevating design as a driver of ethical, operational, and strategic impact.

About Gryphon Citadel

Gryphon Citadel is a management consulting firm headquartered in Philadelphia, PA, with a European office in Zurich, Switzerland. Known for our strategic insight, our team delivers invaluable advice to clients across various industries. Our mission is to empower businesses to adapt and flourish by infusing innovation into every aspect of their operations, leading to tangible, measurable results. Our comprehensive service portfolio includes strategic planning and execution, digital and organizational transformations, performance enhancement, supply chain and manufacturing optimization, workforce development, operational planning and control, and advanced information technology solutions.

At Gryphon Citadel, we understand that every client has unique needs. We tailor our approach and services to help them unlock their full potential and achieve their business objectives in the rapidly evolving market. We are committed to making a positive impact not only on our clients but also on our people and the broader community. At Gryphon Citadel, we transcend mere adaptation; we empower our clients to architect their future. Success isn’t about keeping pace; it’s about reshaping the game itself. The question isn’t whether you’ll be part of what’s next—it’s whether you’ll define it.

Our team collaborates closely with clients to develop and execute strategies that yield tangible results, helping them to thrive amid complex business challenges. Let’s set the new standard together. If you’re looking for a consulting partner to guide you through your business hurdles and drive success, Gryphon Citadel is here to support you.

Explore what we can achieve together at www.gryphoncitadel.com